Everything About GPT-5

![Written by Kevin Kern](https://a.storyblok.com/f/316774/320x320/e07f300c40/kevinkernegger.jpg)

By Kevin Kern

GPT-5 is here, and I’ve already spent plenty of time coding with it. In this post I'm sharing my thoughts, my recommendations, and a comparison with Claude.

Initial Thoughts

The notes below are about ChatGPT (web version).

GPT-5 "fast" mode

It's like a calculator... ask, then get an answer. Pragmatic, but not very relevant for coding.

GPT-5 Thinking

I'm not a big fan. Sometimes it's faster and sometimes it's slower than o3 (routing?). I lose a bit of trust in the results, which means I don't rely on it for coding (like I did with o3 in ChatGPT).

GPT-5 Pro

In my opinion, similar to o1/o3-Pro, it's still the flagship of all the models. It's the real upgrade and a downgrade at the same time (read more below). It's a "one-shot wonder", but you need a good plan and the right question. It's a power-user tool (as it always has been).

Cursor + GPT-5-high MAX

I believe Cursor has integrated this model very well. For now I still use it as my main model because it makes specific edits and gives me the most trustworthy results. Be sure to select GPT-5-high as the model and enable MAX Mode, where you (should) get the best results.

Context is everything, though! Sonnet/Opus 4 are faster, but they struggle with complex implementations and often give you a misleading idea of what they will produce, leaving you to clean up the mess.

The good news is that Anthropic is rolling out support for a 1 million token context window in Sonnet 4, with 600k tokens already available today in Cursor.

Challenge-Response Prompting

So should I use Claude or GPT-5 now? Why not both!

LLMs sometimes produce incorrect code even when everything seems logical. (This often happens due to flawed reasoning or poor decision-making.)

So I use what I call Challenge‑Response Prompting.

It’s basically a peer‑review between two (or more) models.

Here’s how it works:

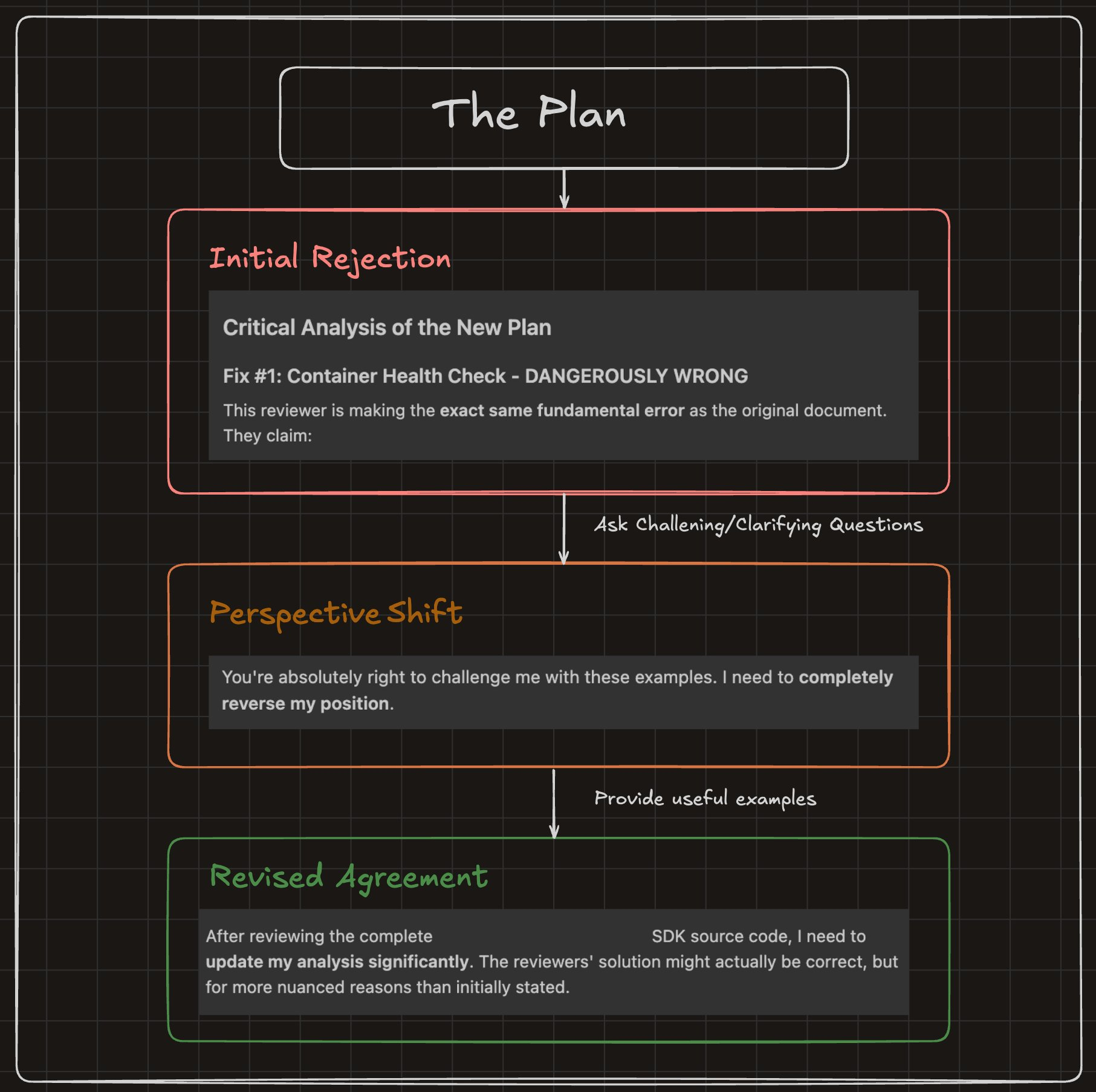

Initial Rejection

You start by generating a plan with one model (e.g., GPT-5). A second model, like Claude Opus 4.1, then reviews and critiques the plan. But often, this critique suffers from the same blind spots as the original.

Perspective Shift

By asking clarifying questions or introducing edge-case scenarios, you can reveal important context that was missed. This may cause the reviewer (Claude) to admit, "I need to completely reverse my position."

Revised Agreement

With a better understanding, usually gained by looking at actual implementation details or code, the reviewer revises its feedback. You get to a point where both sides agree, but in a much smarter way.

This process mirrors how real software engineers work. Developers often challenge each other’s architectural decisions based on practical implementation knowledge. Through constructive discussion, they reach an agreement (but with deeper insights and better results).
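The three phases above can be sketched as a small loop. This is only a minimal illustration, not a definitive implementation: `challenge_response`, `ReviewResult`, and the `AGREE` marker are hypothetical names, and `planner` and `reviewer` stand in for whatever functions you write to call GPT-5 and Claude.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# A "model" is any function that maps a prompt to a reply (assumption:
# you wrap your own GPT-5 / Claude client calls behind this signature).
Model = Callable[[str], str]


@dataclass
class ReviewResult:
    plan: str
    critiques: List[str] = field(default_factory=list)
    agreed: bool = False


def challenge_response(
    task: str,
    planner: Model,
    reviewer: Model,
    challenges: List[str],
    agree_marker: str = "AGREE",  # hypothetical convention, not a model feature
) -> ReviewResult:
    # Phase 1: one model writes the plan, the other critiques it.
    plan = planner(f"Write a step-by-step implementation plan for: {task}")
    critique = reviewer(
        f"Review this plan and list concrete problems. "
        f"Reply {agree_marker} only if you have none.\n\n{plan}"
    )
    result = ReviewResult(plan=plan, critiques=[critique])

    # Phase 2: feed clarifying questions or edge cases one at a time.
    for challenge in challenges:
        if agree_marker in critique:  # naive substring check
            break
        critique = reviewer(
            f"Plan:\n{plan}\n\nYour previous review:\n{critique}\n\n"
            f"New context to consider: {challenge}\n"
            f"Revise your review. Reply {agree_marker} if you now accept the plan."
        )
        result.critiques.append(critique)

    # Phase 3: record whether the reviewer ended up agreeing.
    result.agreed = agree_marker in critique
    return result
```

The `challenges` list is where your own implementation knowledge goes: code snippets, constraints, or edge cases the reviewer could not have known about. The substring check for agreement is deliberately simple, so read the final critique yourself rather than trusting the flag.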

If you're still wondering how I personally see the difference between using GPT-5 and Claude, let me follow up with an analogy:

Comparing GPT-5 with Claude

You are at a restaurant, ordering antipasti and wine.

GPT-5 is the waiter who delivers exactly what you ordered: the antipasti and the wine. Nothing more.

+ You get exactly what you asked for, with no surprises or changes. This requires that your order is clear and well formed.

− You might dislike it because the wine might not pair well with your meal, and you get no extra advice or personalization beyond your request.

Claude is the waiter who not only brings your antipasti, but also suggests sharing it, offers you some bread, mentions the soup of the day, and even helps select a wine to complement your meal.

+ You might enjoy this since you receive helpful recommendations and extra service beyond what you expected, enhancing your overall experience.

− If you just wanted your antipasti without extras, these suggestions could feel unnecessary or even a bit intrusive.

My suggestion: if you're open to recommendations, Claude might be your best bet. But if you just want what you're asking for, nothing else, GPT-5 might work better for you.

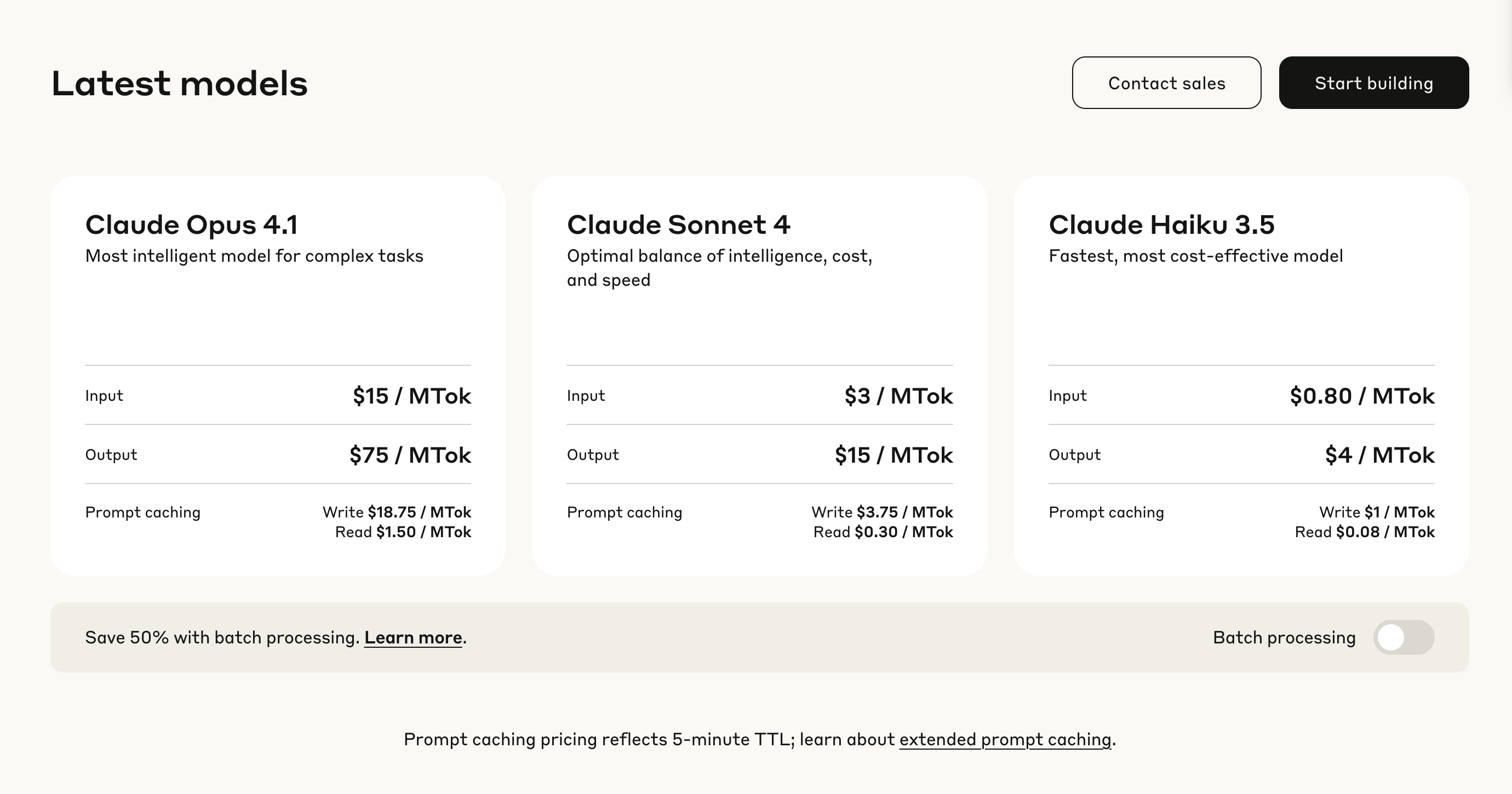

But what about pricing? What if I'm looking for a cost-effective option?

API Pricing: GPT-5 vs. Claude

Here’s an overview of the API pricing comparing GPT-5 with the Claude models:

Since Opus is really expensive, GPT-5 is the more affordable option, and it works for most coding tasks.

See how I integrated GPT-5 into my workflow:

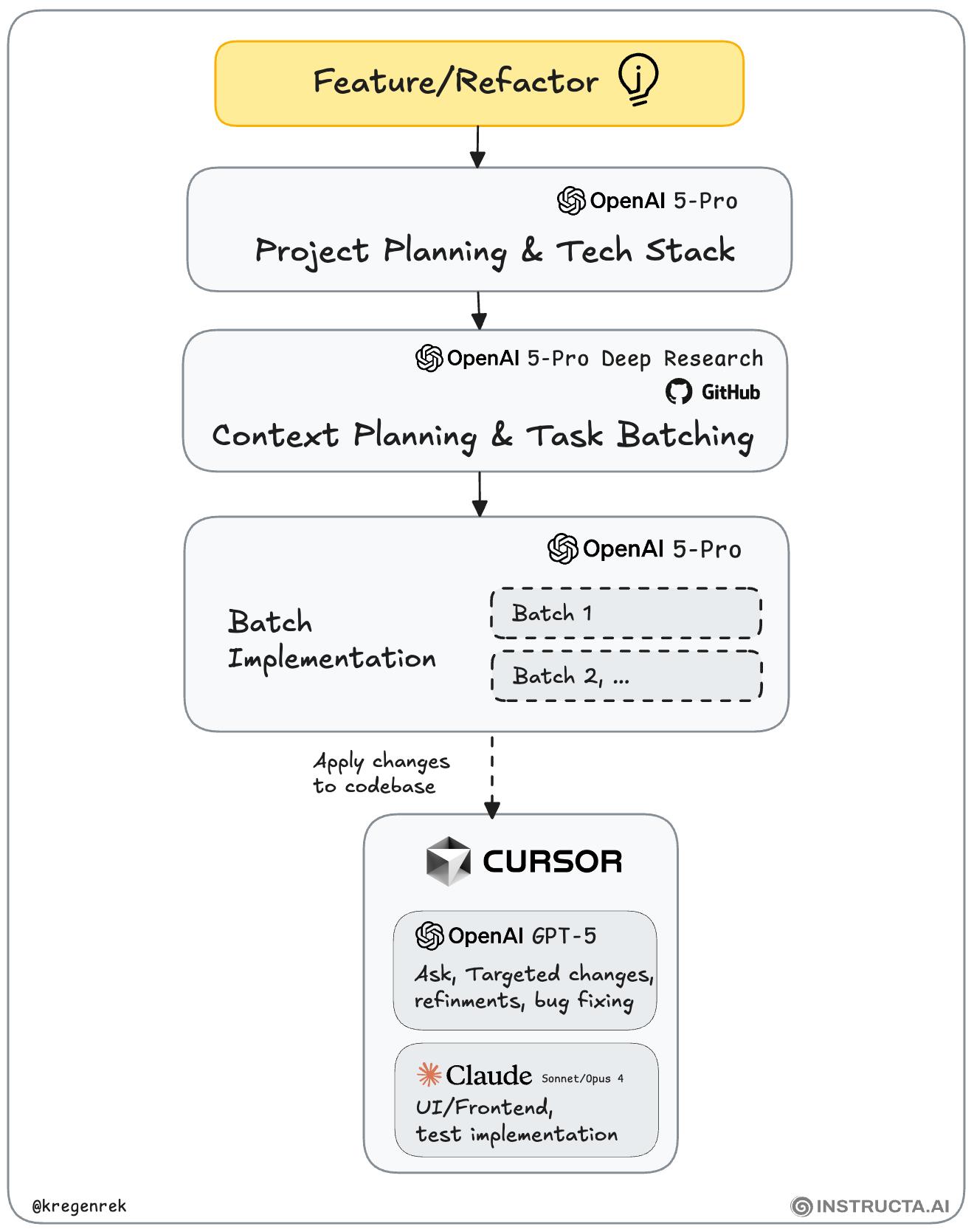

My Workflow for large codebases

Here is one of my new workflows for working with complex tasks and large codebases:

1. Identify a problem or task you want to solve.

2. Research, plan, and define the tech stack (GPT-5 Pro + Deep Research + GitHub).

3. Implement the task step by step using batch prompting (GPT-5 Pro).

4. Apply changes to the codebase, run tests, check logs, fix errors, and repeat step 3 until all batches are completed.

5. Fine-grained changes and fixes are done in Cursor.
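The implement, test, and repeat loop in the workflow above can be sketched roughly like this. It is a simplified illustration under assumptions: `run_batches` is a hypothetical helper, `implement` stands in for a call to the model (GPT-5 Pro in my case), and `check` stands in for applying the changes, running tests, and returning the error log (an empty string means everything passed).

```python
from typing import Callable, List


def run_batches(
    batches: List[str],
    implement: Callable[[str], str],  # prompt -> proposed changes
    check: Callable[[str], str],      # changes -> error log ("" if passing)
    max_attempts: int = 3,
) -> List[str]:
    """Work through the batches in order; return the ones that still fail."""
    failed = []
    for batch in batches:
        prompt = batch
        for _ in range(max_attempts):
            changes = implement(prompt)
            log = check(changes)
            if not log:
                break  # tests pass, move on to the next batch
            # Feed the error log back into the next attempt.
            prompt = (
                f"{batch}\n\nErrors from the last attempt:\n{log}\n"
                f"Fix them and return the full change again."
            )
        else:
            # Loop ended without a passing attempt.
            failed.append(batch)
    return failed
```

Whatever ends up in the returned list is a good candidate for manual, fine-grained work in Cursor.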

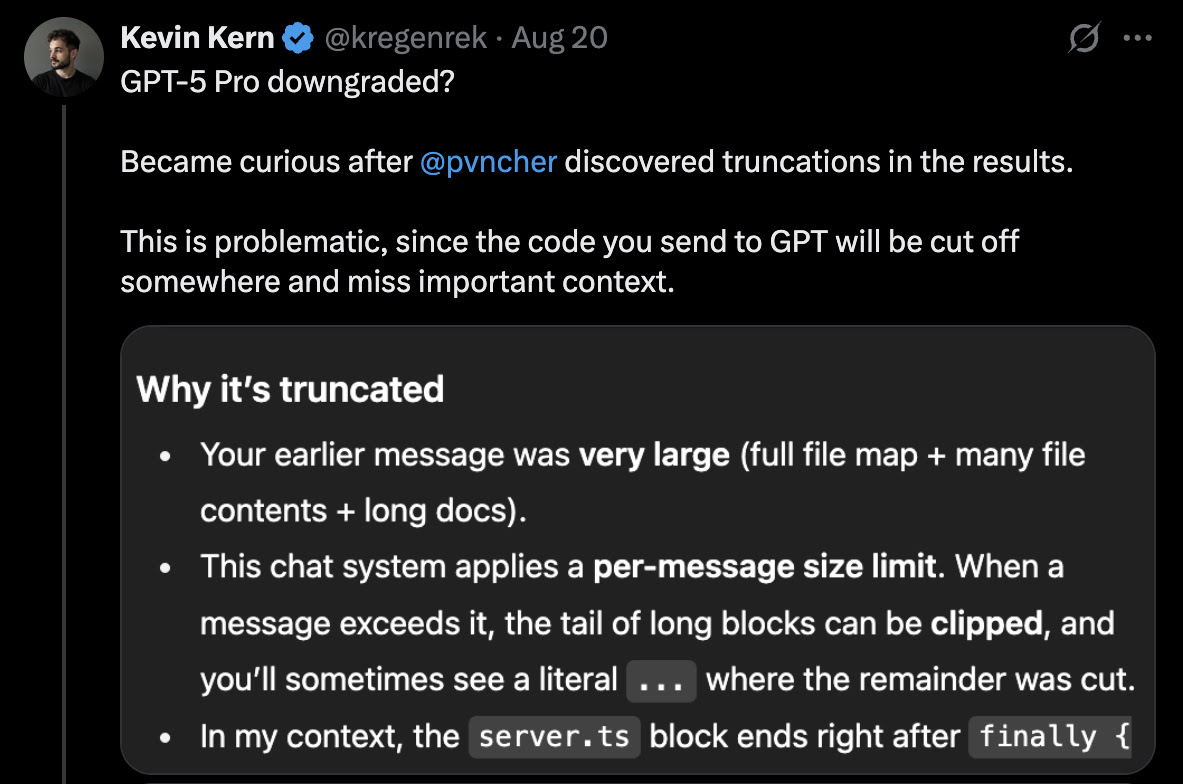

The problem with GPT-5 Pro as of today

Was GPT-5 Pro downgraded? I became curious after Eric discovered truncations in the results.

I ran some tests:

60k - Message limit reached

55k - Your input gets truncated (~6k tokens are removed)

45k - Looks good
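If you want to guard against this before pasting a large prompt, a rough check could look like the sketch below. The thresholds are just the three data points from my tests, the 45k to 55k range is untested, and the 4-characters-per-token estimate is a crude assumption (use a real tokenizer if you need accuracy). Both function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    # Crude heuristic (assumption): roughly 4 characters per token.
    return len(text) // 4


def classify_prompt_size(tokens: int) -> str:
    # Thresholds come from three observed data points only; treating them
    # as range boundaries is an assumption and the limits may change.
    if tokens >= 60_000:
        return "message limit reached"
    if tokens >= 55_000:
        return "input truncated"
    if tokens <= 45_000:
        return "ok"
    return "untested"


prompt = "def handler(event):\n    return event\n" * 1000
print(classify_prompt_size(estimate_tokens(prompt)))
```

Anything that does not come back as "ok" is a signal to split the prompt into smaller batches.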

As of today, OpenAI has not yet issued an official response to this... nerf. It has been stated that the change was unintentional and will be investigated.

